Strand Integration

We propose a refinement method for hair geometry that incorporates the gradient of strands into the computation of their positions. The basic idea is to use both position and direction information to improve geometric coherence. If we move along a 3D line in its direction, we will likely encounter another 3D line that represents another piece of the same strand. By following successive 3D lines, we can determine the shape of an entire strand of hair. We formulate this relationship in a manner similar to normal integration, but for 3D lines.
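The intuition of following successive 3D lines can be illustrated with a small greedy tracer. This is only a sketch of the idea, not the integration formulation used by the method. The function name `trace_strand`, the `step` and `radius` parameters, and the assumption that all directions are consistently oriented unit vectors are hypothetical choices made for illustration.

```python
import math

def trace_strand(lines, start, step=1.0, radius=0.5, max_steps=100):
    """Greedily chain 3D lines into one strand (illustrative sketch).

    lines: list of (point, direction) pairs, each a 3-tuple of floats.
    Assumption: directions are unit length and consistently oriented.
    Returns the indices of the lines visited, in order.
    """
    visited = {start}
    chain = [start]
    current = start
    for _ in range(max_steps):
        p, d = lines[current]
        # Move along the current line in its direction.
        predicted = tuple(p[i] + step * d[i] for i in range(3))
        # Look for the closest unvisited line near the predicted position.
        best, best_dist = None, radius
        for j, (q, _) in enumerate(lines):
            if j in visited:
                continue
            dist = math.dist(predicted, q)
            if dist <= best_dist:
                best, best_dist = j, dist
        if best is None:
            break  # no continuation found: the strand ends here
        visited.add(best)
        chain.append(best)
        current = best
    return chain

# Four lines roughly along the x axis plus one unrelated line far away.
example_lines = [
    ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)),
    ((1.0, 0.1, 0.0), (1.0, 0.0, 0.0)),
    ((2.0, 0.2, 0.0), (1.0, 0.0, 0.0)),
    ((3.0, 0.2, 0.0), (1.0, 0.0, 0.0)),
    ((1.0, 5.0, 0.0), (0.0, 1.0, 0.0)),
]
strand = trace_strand(example_lines, start=0)
```

In this toy example the tracer links the four collinear pieces and never reaches the distant fifth line, which is the kind of coherence the direction information is meant to provide.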

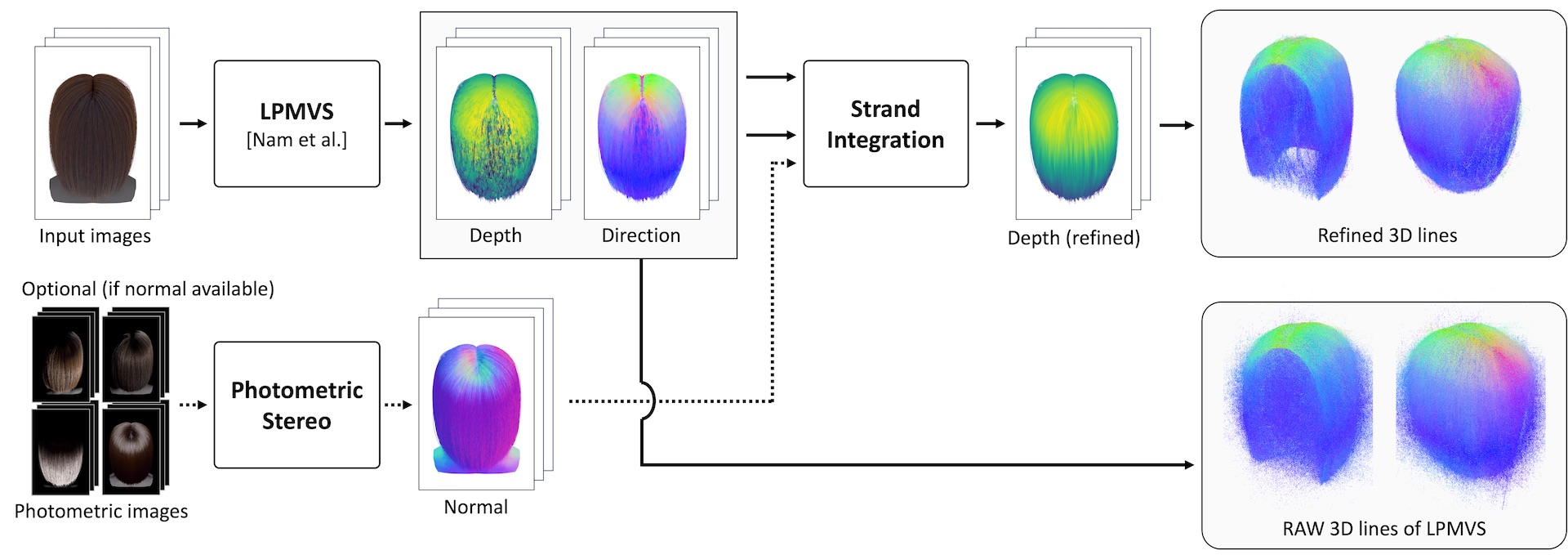

Overall Pipeline

The input images are captured with a multi-view camera setup. As a preprocessing step, we reconstruct 3D lines (depth and direction) using LPMVS. These 3D lines are the input to our method, Strand Integration, which refines the depth map for each view. Our method works without any extra input, but it can optionally accept a normal map as an additional input. We obtain the hair geometry as a set of 3D lines by accumulating the refined 3D lines across views.
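The accumulation step can be pictured as back-projecting each view's depth map and merging the results in a common frame. The sketch below assumes a pinhole camera with intrinsics `(fx, fy, cx, cy)`, z-depth values, and a camera-to-world transform `X_w = R X_c + t`. None of these conventions, nor the function names, come from the source; they are hypothetical and only show the general shape of such a step.

```python
def backproject(depth, fx, fy, cx, cy):
    """Convert a depth map (list of rows, None = invalid pixel) into
    camera-space 3D points, assuming a pinhole model and z-depth."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None:
                continue  # skip pixels without a reconstructed line
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

def to_world(points, R, t):
    """Apply the assumed camera-to-world rigid transform X_w = R X_c + t."""
    return [
        tuple(sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3))
        for p in points
    ]

def accumulate(views):
    """Merge the back-projected points of all views into one list."""
    merged = []
    for view in views:
        pts = backproject(view["depth"], *view["intrinsics"])
        merged.extend(to_world(pts, view["R"], view["t"]))
    return merged

identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
views = [
    {"depth": [[2.0, None]], "intrinsics": (1.0, 1.0, 0.0, 0.0),
     "R": identity, "t": (0.0, 0.0, 0.0)},
    {"depth": [[1.0]], "intrinsics": (1.0, 1.0, 0.0, 0.0),
     "R": identity, "t": (1.0, 0.0, 0.0)},
]
merged_points = accumulate(views)
```

A full version would carry the per-pixel direction along with each point, rotated by `R`, so that the merged result is a set of 3D lines rather than bare points.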

Results

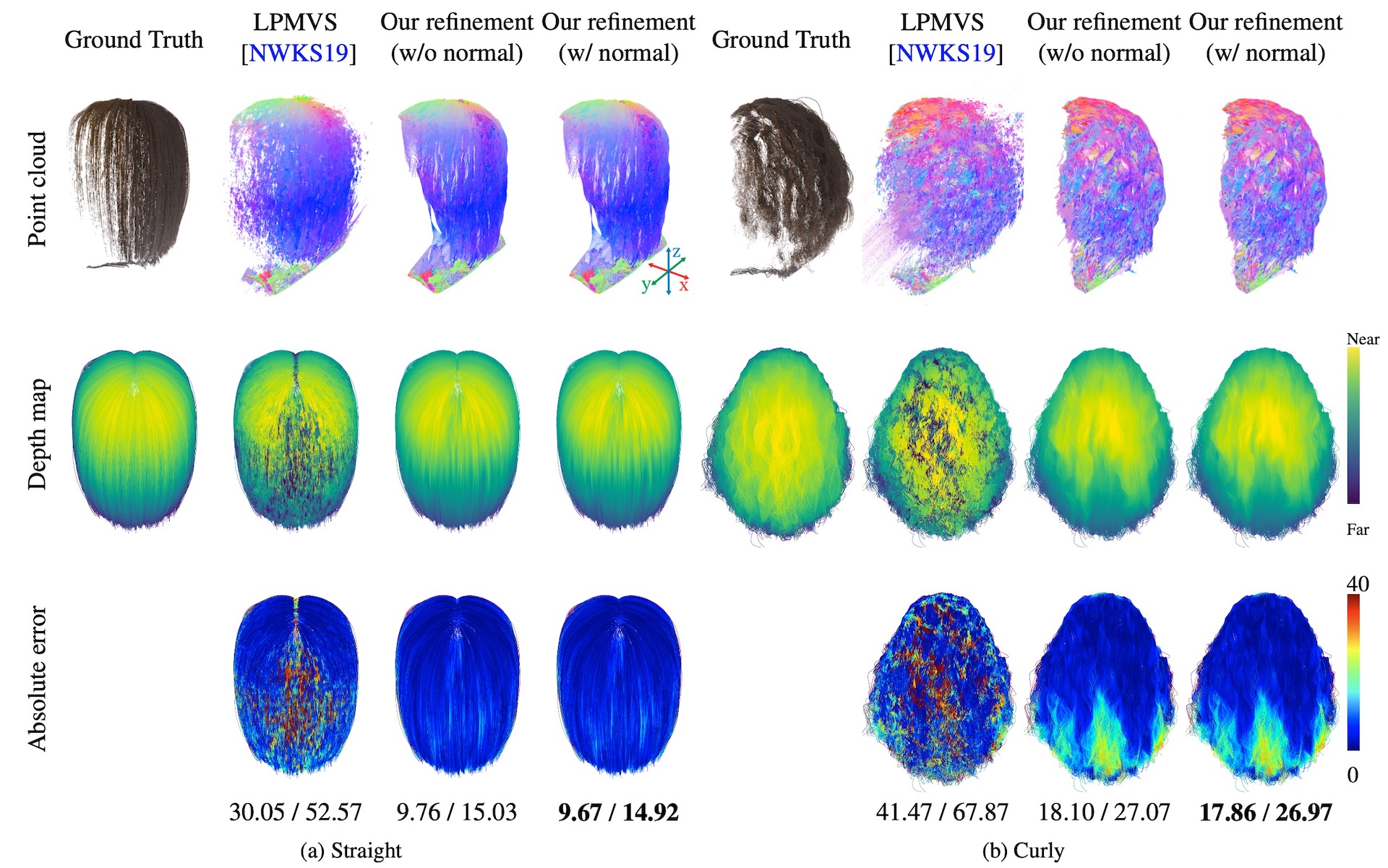

Synthetic data

We evaluated our method on synthetic data containing four hairstyles. Measured by the MAE and RMSE of the depth maps, our method achieved lower error than LPMVS.
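For reference, the two depth-map error metrics can be computed as in the sketch below. It assumes that the second metric is the root-mean-square error and that only pixels valid in both maps are compared. The function name `depth_errors` and the use of `None` to mark invalid pixels are hypothetical conventions, not taken from the evaluation code.

```python
import math

def depth_errors(pred, gt):
    """Return (MAE, RMSE) between two flattened depth maps.

    Pixels where either value is None are ignored (assumed convention).
    """
    diffs = [p - g for p, g in zip(pred, gt) if p is not None and g is not None]
    if not diffs:
        raise ValueError("no valid pixels to compare")
    mae = sum(abs(d) for d in diffs) / len(diffs)
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return mae, rmse

# Toy example: differences on valid pixels are 0, 2, 0, 2.
mae, rmse = depth_errors(
    [1.0, 4.0, None, 3.0, 7.0],
    [1.0, 2.0, 9.0, 3.0, 5.0],
)
```

RMSE penalizes large outliers more strongly than MAE, so reporting both gives a view of the typical error as well as the influence of noisy pixels.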

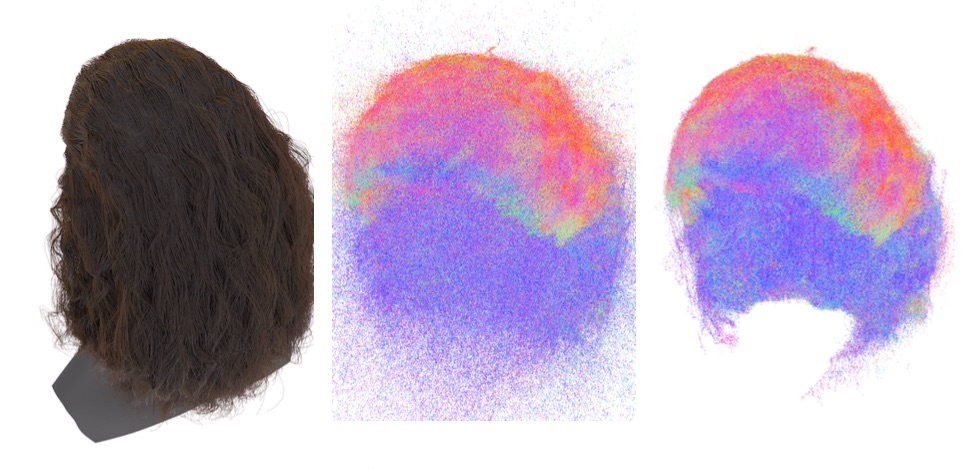

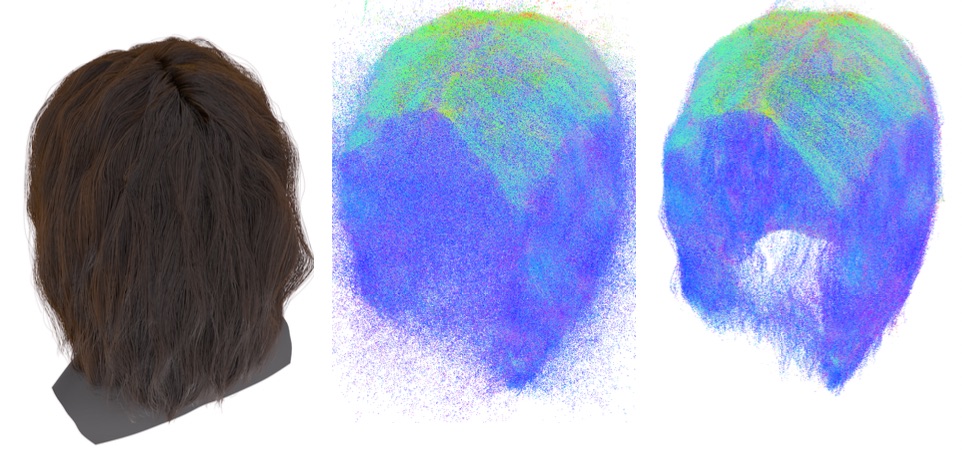

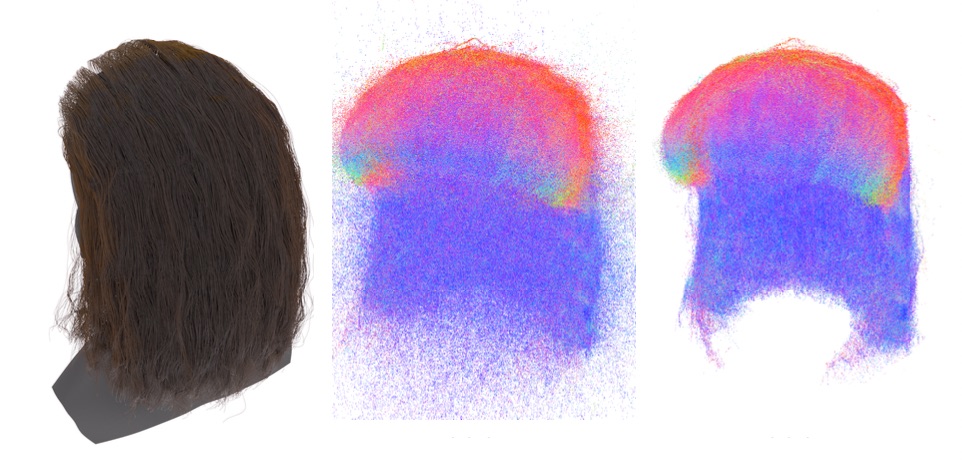

We also evaluated the 3D points merged from all views. Our method reduced the number of noisy points.

Hairstyles shown: Straight, Curly, Wavy, Wavy Thin

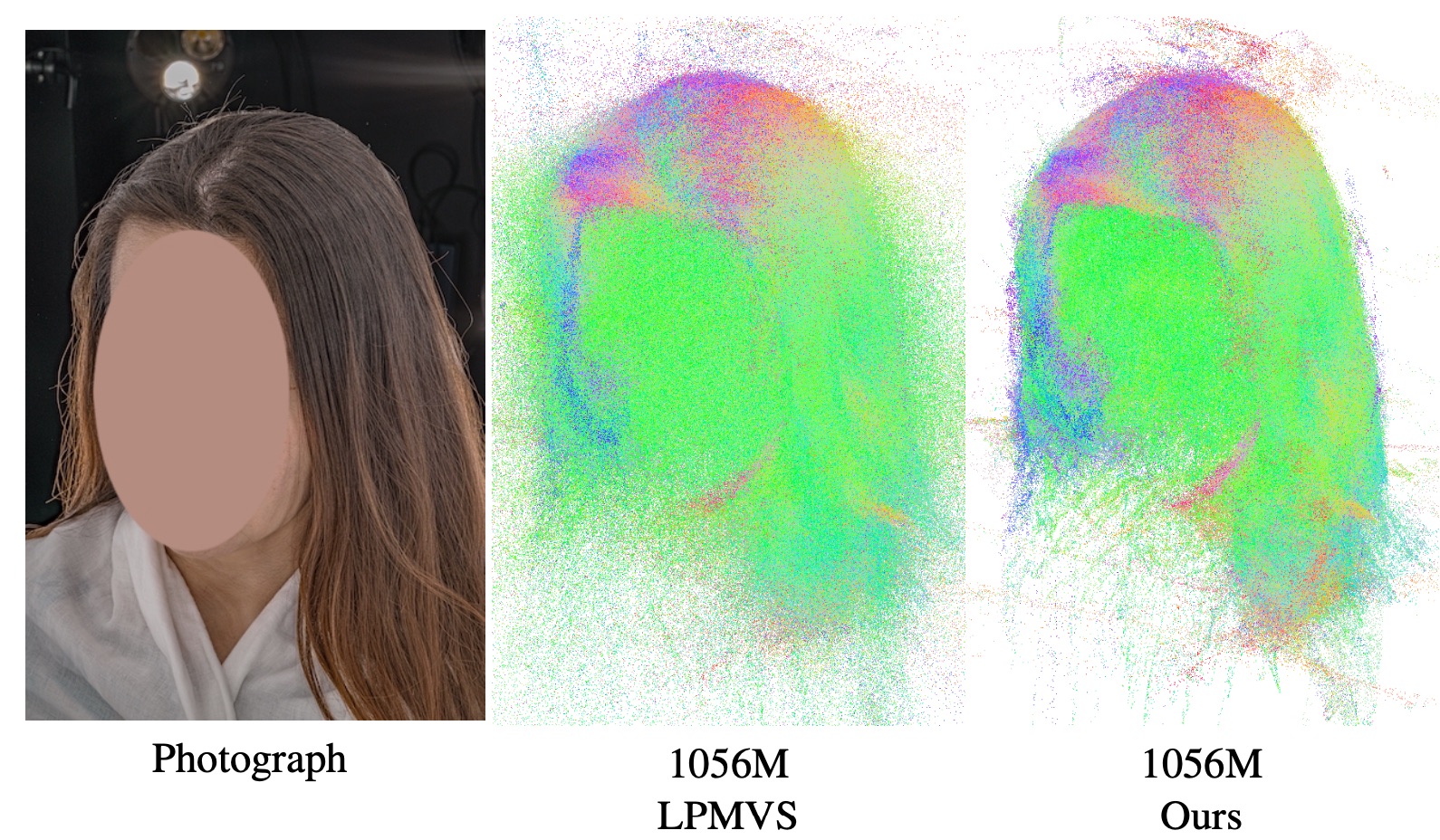

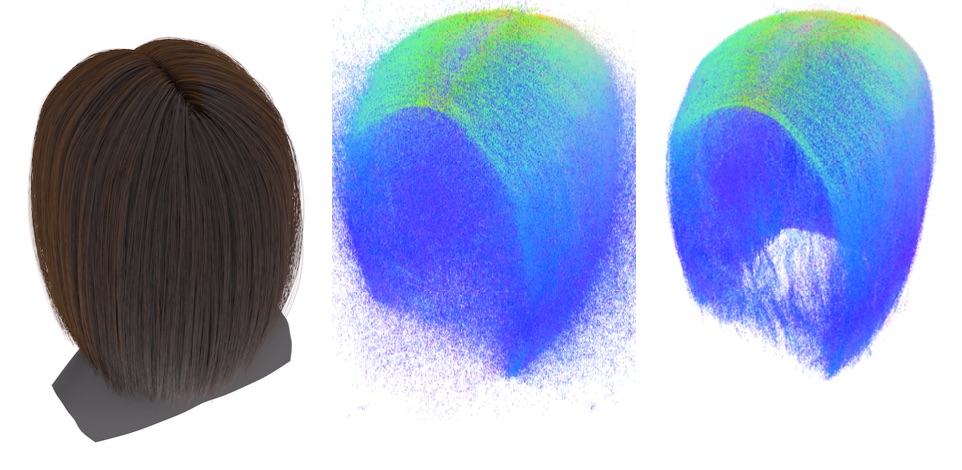

Real data

In a real capture scenario, our refinement method also produces less noisy and denser 3D lines.